Rethinking economics to better sustain prosperity and peace

Economic instability has been a fundamental source of social trauma and conflict throughout human history, as previous chapters have highlighted. Cultural Golden Ages—whether Jewish, Christian, Muslim, Asian, African, feudal, democratic, colonial, capitalist or Marxist/socialist—have all at some point left a trail of faltering or failed economies. Clearly, the root causes of economic growth and decline need to be better understood if human violence is to be reduced.

In this context, high-tech entrepreneuring has spawned valuable insights into how economic growth accelerates and is sustained. I’ll begin with a rhapsody of reflections on the topic from one of high tech’s most prodigious prognosticators. I’ll end with a somber drum roll from the seamy economic underside of all things digital.

Money Is Time

Many have heard the adage that “time is money,” but have you heard the reverse, that money is fundamentally a measure of time? Recalibrating economic theory to reflect this thought has been called the greatest breakthrough in 21st century economics. George Gilder, proven pundit of the digital age, explains:

The ultimate measuring stick of wealth is time. What remains scarce when all else becomes abundant are our minutes, hours, days and years. Time is the only resource that cannot be recycled, stored, duplicated, or recovered. Money is most fundamentally tokenized time. When we run out of money we are really running out of the time to earn more.[1]

William Nordhaus foreshadowed this insight with a defining study of lighting costs published in 1998. He found that the amount of light that took 1000 hours of labor to pay for in the early 1800s required just 10 minutes of labor in 1990, despite rising prices. Where did this dramatic shift come from? Nordhaus concluded:

The essential difficulty arises for the obvious but usually overlooked reason that most of the goods we consume today were not produced a century ago. [Conventional economic] price indexes miss much of the action during periods of major technological revolution. They overstate price growth.[2]

He might also have said conventional economic metrics understate gains in purchasing power that result from technology innovation.

Changing Time-Prices

A 2022 book, Superabundance, by Marian L. Tupy and Gale L. Pooley, expanded voluminously on Nordhaus’s work to show that personal purchasing power, measured by time-prices, has grown steadily over the last hundred years despite social upheavals, wars, pandemics and economic depressions.

Time-prices measure how long someone has to work to purchase a given good or service. They are calculated by dividing average local prices for benchmark goods and services by local wages or salaries. Such metrics can be reliably established for many individual nations and provide universal measures of purchasing power that offer more insight into what’s happening economically than traditional supply and demand analyses.

For example, Tupy and Pooley found that in India, the amount of time required to earn enough rice for one day’s meals in 1960 was approximately seven hours. By 2022, it had dropped to under an hour. In Indiana, the time required to earn a comparable daily amount of wheat dropped from one hour in 1960 to 7.5 minutes in 2022.[3] Workers in both India and Indiana benefitted from improved purchasing power while global prosperity increased in the background.
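The arithmetic behind a time-price is simple enough to sketch in a few lines of Python. The function names below are illustrative, not Tupy and Pooley’s, and the sample figures are the rounded ones quoted above. “Under an hour” is treated as exactly one hour, so the rice result is a conservative lower bound.

```python
def time_price_hours(money_price: float, hourly_wage: float) -> float:
    """Hours of work needed to buy an item: its price divided by the hourly wage."""
    if hourly_wage <= 0:
        raise ValueError("hourly_wage must be positive")
    return money_price / hourly_wage


def affordability_gain(old_time_price: float, new_time_price: float) -> float:
    """How many units the same amount of work buys now for each unit it bought before."""
    if new_time_price <= 0:
        raise ValueError("new_time_price must be positive")
    return old_time_price / new_time_price


# Rounded figures quoted in the text (hours of work per daily ration).
rice_india = affordability_gain(7.0, 1.0)          # "under an hour" assumed to be 1.0
wheat_indiana = affordability_gain(1.0, 7.5 / 60)  # 7.5 minutes expressed in hours

print(f"Rice in India: at least {rice_india:.0f}x more affordable")
print(f"Wheat in Indiana: {wheat_indiana:.0f}x more affordable")
```

On these figures, an hour of work in Indiana bought eight times as much wheat in 2022 as it did in 1960, and an hour of work in India bought at least seven times as much rice.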

Superabundance examines in depth how long American workers and salaried employees have to work to purchase standard measures of food, commodities and services. The authors found that during the hundred years from 1919 to 2019, Americans purchased these items with fewer hours of labor and had more money left for other uses. Tupy and Pooley refer to this as the “Personal Resource Abundance Multiplier.”

“We buy things with money, but we pay for them with time,” writes Pooley. Even Adam Smith latched onto this principle in The Wealth of Nations, the 1776 book that set modern economic theory in motion. Smith wrote: “the real price of everything is the toil and trouble of acquiring it…. What is bought with money…is purchased by labour.”

Pooley notes “labor is only part of the equation. The ‘toil and trouble’ of labor is really a measure of time.”[4] George Gilder explains: “Tupy and Pooley show that for more than a century and a half, measured by time-prices, resource abundance has been rising at a rate of 4 percent a year.” This leads to the conclusion that people are not the heavy burden on limited resources that many futurists believe, but are the very source of increased prosperity derived from innovation and better use of existing resources.
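To get a feel for what a steady 4 percent a year implies, here is a minimal sketch of the compounding. It assumes the rate is constant and compounds annually, which is a simplification since the underlying data vary from year to year. The helper names are mine, not Gilder’s.

```python
import math


def doubling_time_years(annual_rate: float) -> float:
    """Years for a quantity to double at a constant annual growth rate."""
    return math.log(2) / math.log(1 + annual_rate)


def growth_factor(annual_rate: float, years: int) -> float:
    """Total multiple after compounding annual_rate for the given number of years."""
    return (1 + annual_rate) ** years


rate = 0.04  # the 4 percent a year quoted above
print(f"Doubling time: {doubling_time_years(rate):.1f} years")
print(f"Multiple after 100 years: {growth_factor(rate, 100):.0f}x")
```

At that pace, abundance doubles roughly every eighteen years, which is why a seemingly modest annual rate adds up to a dramatic change over a century.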

Although serious issues of global poverty, labor injustice and hunger obviously remain, time-price data demonstrate that technology innovation measurably benefits wide swaths of people economically across many nations and cultures. Even people in less developed countries benefit economically, as well as personally, from access to devices such as cellphones, personal computers and other digitally empowered products and services.

The Information Theory of Economics

Behind declining time-prices hovers an Information Theory of Economics born of the digital revolution. This theory fundamentally challenges conventional economic thinking by explaining how wealth creation stems primarily from knowledge that leads to innovation rather than from incentives or disincentives to buy and sell. Access to capital and material resources is certainly necessary, but knowledge and innovation are what directly drive economic growth and sustainability.

George Gilder asserts that economists from Adam Smith on have believed there’s no economic stability or growth without incentives. Says Gilder, “it is much harder to imagine the cascades of invention and innovation [that actually drive economic growth], so we reduce [economics] to responses to incentives.”[5] In his view, this likens economics to Darwin’s proposition that evolution is a product of “incentives” that favor survival selected from random mutations. By depicting entrepreneurs as oppressors, opportunists, clever bargainers or the product of randomly evolving bio-physics, economists tend to view growth narrowly, primarily as the result of capital accumulation or sheer population growth. Gilder notes:

The problem with Darwinian theory is that [what] survives is fit; what is fit survives. Such…reductionism also haunts modern economics. As a result it tells us little about the ideals, aspirations and patterns of behavior conducive to a good and productive society, where creativity flourishes…and wealth abound[s].

Evolutionary thinking, adds Gilder, focuses “on greed rather than creativity, on zero-sum competition for scarce material resources, rather than human ingenuity that produces abundance.” He summarizes effusively: “economics is not about order and equilibrium, but about creativity measured by disruption, disorder, economic growth and surprise—all done to the dance of time.”

Moore’s Law and Hyper-Moore’s Law

A well-known principle called “Moore’s Law” exemplified the information theory of economics in action when the digital era began to unfold. In 1965, Gordon Moore, who went on to cofound Intel, observed that the number of transistors on an integrated circuit was doubling approximately every year due to design and manufacturing improvements. The pace soon settled down to doubling every two years, and it held roughly true for sixty years. The new semiconductor industry birthed a global economy that has expanded more than seven times since the invention of the microprocessor, reaching $140 trillion in 2017. The rising tide of smaller, more powerful computer chips lifted all economic boats.
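The scale of this doubling is easy to check. The sketch below assumes a clean doubling every two years, which idealizes Moore’s observation, and the function name is illustrative.

```python
def moore_multiple(years: float, years_per_doubling: float = 2.0) -> float:
    """Growth multiple after `years` if the count doubles every `years_per_doubling`."""
    return 2 ** (years / years_per_doubling)


# Compare a decade, a generation, and the sixty-year span mentioned above.
for span in (10, 30, 60):
    print(f"{span} years: {moore_multiple(span):,.0f}x")
```

Sixty years of doubling every two years works out to thirty doublings, a roughly billion-fold increase in transistor count.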

Today, 12 trillion transistors can be integrated on a single 12-inch wafer, compared to only 8 transistors on the first silicon chips. Moreover, the digital world is on its way to using carbon derivatives in chip manufacturing.[6] Looming behind that is the prospect of quantum computing, which promises even greater exponential gains in processing speed and capacity poised to release renewed economic growth.

With the advent of AI, industry leaders began speaking about a “Hyper-Moore’s Law,” where AI-enhanced computing performance doubles or even triples every year! It’s about making smaller, faster, smarter components connected across global networks function in harmony at nearly the speed of light anywhere on earth.

Knowledge gained from computing and software development reveals powerful principles for what Gilder calls:

…a new economic revolution that overturns the incentive-run mechanisms, materialist assumptions, scarce resources, and static demand-side models of [economists like] Adam Smith and Karl Marx. The new information theory is leading us to a new understanding of economics and a new era of abundance and creativity.[7]

At the heart of this revolution is the recognition that we prosper as what we know increases, while we progress along learning curves essential to increasing prosperity for everyone.

Learning Curves and Knowledge Creation

Learning curves comprise what Gilder calls “the most fundamental fact of capitalist growth.” To cite a simple example, consider aluminum. Aluminum was once so rare it was far more valuable than gold. King Herod is said to have had dining utensils made by a smith who knew how to smelt aluminum ore. They were so valuable, the legend goes, the king had the smith executed so no one else could discover his secret!

Two millennia later, a twenty-year-old student at Oberlin College in Ohio, Charles Martin Hall, set out to find a way to convert bauxite into usable aluminum. After nine years, he discovered how to do it by using an electric current passed through a liquid in a carbon-lined steel pot. In 1888, Hall founded a large-scale aluminum smelting plant in Pittsburgh that eventually became Alcoa. Today, we all can buy aluminum foil dirt cheap. Aluminum has always been the third most abundant element in earth’s crust, but until Hall’s discovery it was all but useless as a metal. Learning made the difference.

To understand the pivotal role of learning curves in generating economic value, it’s helpful to grasp this Gilderism:

Under capitalism, capital migrates not to those who can spend it best [i.e., the uber rich] but to those who can expand it best [risk-taking entrepreneurs who advance critical learning curves]. To expand wealth—to enrich many lives—depends on knowledge and learning, not on mere [economic] incentives.[8]

In the information theory of economics, companies that take risks and learn by trial and error how to make successful new products and services account for far and away the bulk of economic growth. Gilder calls this the “real economy of knowledge.”

The Materialist Superstition

Against such useful knowledge, Gilder contrasts the “mirage of scarcity” advanced by dire narratives about income inequality, the scourge of environmental damage, and the absolute limits of earth’s natural resources. Recall here the Club of Rome’s belief that looming environmental crises are scapegoats useful for driving radical change. Gilder argues the earth’s resources may be limited, but knowledge about how to transform and use them is not. No scapegoat is needed when people are free to learn.

The “materialist superstition” is that wealth consists of things rather than thoughts, of accumulated capital rather than accumulated knowledge. While acknowledging that resource scarcities occur, even catastrophically at times, the superstition lies in defining economic potential primarily through the lens of scarcity.

Critical scarcities certainly need to be addressed, but fixating on resource limitations misses the fundamental source of resource multiplication, namely, human freedom to learn, create and innovate.

Digital Progress Empowers Individuals on the Fringe

When personal computers came on the scene, they decentralized information processing and displaced reliance on massive mainframe computers that needed tons of cooling. Not long afterward, organizations began to decentralize work by pushing decision-making down from slow-moving, bureaucratically encumbered administrative centers supported by mainframe computers to individuals in the ranks of corporate hierarchies, empowered by personal computers. In a metaphoric sense, networked organizations became “cooler” and more effective than huge, functionally overheated hierarchical dinosaurs.

Today, portable devices and networked information enable people to perform complex tasks out on the fringe, from literally anywhere. AI extends the decentralization principle even more. Technology futurists believe the global prosperity created by these innovations will make past technological revolutions seem antiquated. The benefits won’t simply affect investors and entrepreneurs but entire industries, economies, institutions, governments and fundamental ways of life.

Gilder concludes: “Costs will plummet and productivity soar not only in the semiconductor industry but across the world economy.”[9] As these economic efficiencies progress, decentralization of power and decision making will also continue apace due to digital innovation, which, of course, has both positive and negative implications.

The Underside of the Hopeful Digital Era

No hopeful story proves its mettle without dealing with disappointment, and the story of digital economic progress is no exception. Dangerous disappointments are not hard to see. One is significant environmental damage incurred by high tech manufacturing that often gets lost in rhapsodies of digital hope. A second is the global crisis of distrust that prowls the world looking for villains and scapegoats, as the Club of Rome intoned. Part of the subtext here is further enablement of the nefarious Dark Web where the dark side of humanity thrives in a shrouded digital ecosystem all its own. A third disappointment, or better stated a threat to societal stability, is the corroding effect on traditional human values that personal empowerment and social media provoke, substituting virtual realities and dangerous self-empowered fantasies for actual human contact and, yes, real love.

Here, I can only highlight these disaster zones, but they cannot be ignored when reckoning the ultimate cost of the digital empires being built by today’s high tech corporate rulers. These challenges cannot be ruled out as having the potential to bring digital hope to a halt, or at least slow it down, perhaps dramatically, while many people lag behind in personal growth and relational maturity.

The environment: The environmental costs of the dawning AI era include the residue of mining Rare Earth Elements (REEs) used in making digital products and batteries. REEs are actually abundant but hard to separate from other elements, which is what makes them “rare.” Huge leaching ponds of toxic chemicals are needed. Until better methods are found, for every ton of rare earth, 2,000 tons of toxic waste are produced, including one ton of radioactive waste.

China is the world leader in REE production, as well as in creating “cancer villages” where many people have reportedly been found with cancer due to leaching pond pollution. Meanwhile, China is actively expanding production to Africa and elsewhere, sometimes building infrastructure for host nations in exchange for REE mining rights.[10] While world resource abundance promises to expand, REE mining remains a serious blight on the digital dance of time extolled by Gilder. Even so, new REE production facilities are working hard to overcome these problems. In 2025, the U.S. announced a series of promising new approaches to REE extraction that addressed the toxic waste problem while offering lower-cost production.

Big appetites for electricity: Huge amounts of electricity are needed to run vast data centers for cryptocurrencies, global network clouds and AI hubs. By 2026, electricity consumption from data centers is projected to more than double from 2022. That’s equivalent to bringing onstream the electricity needs of an entire country the size of Sweden or Germany! Huge water-cooled facilities are needed for these data centers. Either how we produce power or how the digital world uses power has to change (probably both). Some believe graphene holds great promise for both possibilities. Others point out that the more AI is used, the more demand it places on power consumption, possibly diminishing the positive effects of improved energy efficiencies.

The Global Trust Crisis

Possibly the greatest economic challenge of the 21st century is building trust while protecting freedoms essential for innovation. Trust fundamentally underpins economic growth, governance, and peace. Money works because of trust. Contracts are signed, commerce flows, and economies function based on mutual trust among parties. In an atmosphere of distrust, monetary systems lose integrity and require extensive monitoring, surveillance and control in order to function.

The World Economic Forum’s 2024 Global Risks Survey identified misinformation and disinformation as the most severe near-term global risks undermining trust, freedom, economic stability and world peace. Societies establish ethical frameworks and enforcement structures to build trust and protect against corruption, false information, and abuse of power. Despots employ the same tools to exploit technology for their own purposes—which brings us to the Dark Web.

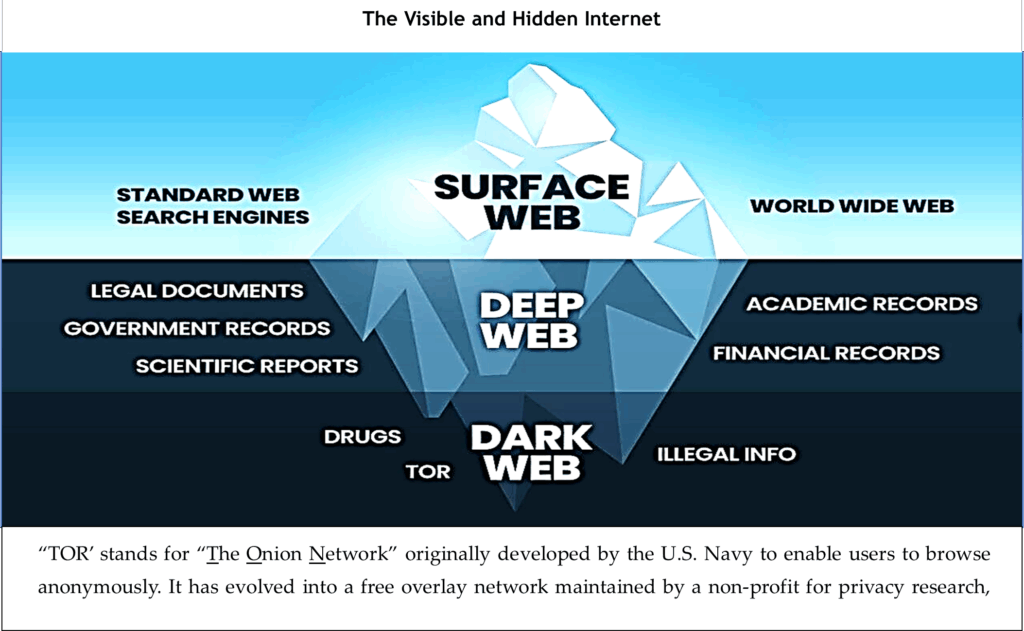

The Dark Web: The Dark Web is a part of the internet that isn’t indexed by open search engines. (See image above.) It’s believed to be 5,000 times larger than the visible internet. Around 60% of its content is used for illegal purposes, and 70% of online requests are believed to be seeking contact with criminals. Almost all transactions are conducted in untraceable cryptocurrency. In short, the Dark Web has become a hotbed of distrust and malevolence.[11] It must be taken into account when assessing the risks that threaten our evolving digital world.

Is Prosperity Sustainable Digitally or Otherwise?

As we have seen, true economic advancement requires balancing freedom to learn and create with shared values that maximize trust and enable the continuous flow of goods and services, including to the impoverished and needy. This is not simply a matter of capitalism in its best form versus socialism or communism in their best forms. It’s entrepreneurial creativity and financing propelled by recognition of the need for economic growth to reduce downward cycles of poverty.

A central challenge is to channel competitive energies into cooperative goals while optimizing freedom for as many people as possible. The globalists, autocrats and digital dreamers get this part right, but their systematic solutions are clouded by scarcity-based thinking, ever-present bad guys, and technology systems that have significant environmental impacts and remain vulnerable to failure, abuse and corruption.

China is an interesting case study in how authoritarian regimes walk this tightrope between freedom and control, as highlighted below. But China is far from the only nation wrestling with boundary issues as hyper-economics and hyper-surveillance vie for dominance in the new economic globalism being reshaped in the Donald Trump era.

No wonder human “purpose” is the thing Bill Gates said befuddles him most about the emerging AI Age. Defining human purpose has long been the domain of religion and spirituality, to which I’ll turn shortly, after looking for a moment at the tipping point that erupted in Gaza.

The Chinese Economic Tightrope

China is a great example of capitalism’s power to restore a faltering economy, as well as the power of digital surveillance to dominate an entire population. Walking this tightrope between freedom and control has become an art form for the Chinese Communist Party (CCP).

After Mao’s Cultural Revolution failed economically, his successor, Deng Xiaoping, introduced “socialism with Chinese characteristics,” deferring the CCP’s goal of total communism to a distant utopian future. Deng privatized rural collectives, loosened other state controls, fostered small private enterprises, and began to allow foreign investments. China’s economy rebounded over time into what is now called the “Chinese economic miracle,” said to be the greatest economic recovery with the least amount of social violence in history. As the country made room for free market forces, the share of Chinese living in severe poverty is reported to have declined from 88% in 1981 to less than 1% in 2015. Even if the numbers are politically exaggerated, it is a compelling example of personal abundance multiplication.

The miracle faltered during the global recession of 2008, leading the CCP to strengthen mass surveillance in an effort to regain control. The CCP’s digitally enhanced social engineering imposed a de facto social contract wherein China’s citizens give up personal data in exchange for “better governance” that, ideally, makes their lives safer, easier and better. If only that were true. In practice, the arrangement has made China the world’s leading surveillance state.

Today, China has the most oppressive digital surveillance in the world, designed to suppress what it calls “ideological viruses.” China ranks first in total people under surveillance (1.44 billion in 2024). The system aims to make people feel watched even when they aren’t, intimidating them into compliance. Categorizing individuals or groups as “extremists” or “terrorists” is enough to legally track their activities.

A mobile phone app available to some local police aggregates biometric data, such as facial and walking gait recognition, with home electricity use, package deliveries, travel habits, even which door people use to enter homes. The system categorizes people’s “religious atmosphere,” political affiliation, and use of over 50 “suspicious” social media apps. It can identify 36 person types and alert local authorities to start an immediate investigation, or even make arrests.

A hidden question is how far China is engaged in exporting its AI-empowered surveillance capabilities as it seeks to expand its global economic footprint. A similar tightrope is now in evidence in the West as nations struggle with the same tension between freedom and control. Many questions about walking this tightrope remain unanswered.

As banking and personal finance become more digitally integrated and “efficient,” there is always a risk that governments will surveil individual financial data and be empowered to monitor, and even intervene in, personal transactions based on political, religious or other variables, undermining personal freedom just as China now does.

[1] George Gilder, Foreword to Superabundance, by Marian L. Tupy and Gale L. Pooley, Cato Institute, 2022, p. xii.

[2] See http://www.nber.org/chapters/c6064. To dramatize his point, Nordhaus’s calculations went back to cavemen, for whom making fire to produce light consumed huge amounts of time.

[3] George Gilder, Life After Capitalism, Regnery Gateway, 2023, pp. 16-17.

[4] Quote is from Pooley’s chapter “Economics Is Not About Counting Atoms,” in Gilder’s Life After Capitalism, pp. 88-89. The chapter explains in detail the principles behind time-prices, as does Tupy and Pooley’s much more extensive Superabundance.

[5] Gilder, Life After Capitalism, pp. 26-27. Gilder quotes on this and the next page are from this book, which describes the Information Theory of Economics in great detail.

[6] Graphene is a form of carbon 1 to 2 atoms thick, 1,000 times more conductive than copper. It has a wide variety of uses, including making digital nano-components. Carbon is the 15th most abundant element in earth’s crust. No scarcity issue here!

[7] Gilder, Life After Capitalism, pp. ix-xi.

[8] Ibid., pp. 24-25.

[9] George Gilder’s Guideposts, Moonshots, newsletters in January 2025.

[10] See “Not So ‘Green’ Technology: The Complicated Legacy of Rare Earth Mining,” by Jaya Nayar, Harvard International Review, August 12, 2021.

[11] See https://www.coolest-gadgets.com/dark-web-statistics/ Retrieved 1-27-25.
